For a decade, augmented and virtual reality promised the future. Most of us saw slick demos, a few pilots, and lots of nausea. Then something changed. Headsets got lighter. Pass‑through video became sharp and color‑true. Phones learned to scan rooms in minutes. New file formats and compression made 3D models load fast on the web. And AI began to help reconstruct spaces quickly, at near‑photoreal quality. The result: spatial computing is finally usable for real jobs.

This guide cuts through the hype and shows what actually works today. We’ll focus on pass‑through AR (mixed reality you see through cameras), fast 3D capture, dependable alignment in the real world, and practical workflows that anyone can pilot in a few weeks. You will not find a hardware buying list or a futurist essay. You will find steps, patterns, pitfalls, and a plan.

What Changed: The Practical Enablers

Spatial computing got several quiet upgrades. Each one matters; together they turn “neat demo” into “reliable tool.”

Pass‑Through That Looks Real

Long ago, pass‑through video was grainy and laggy. Today’s headsets render high‑resolution, color‑accurate scenes with low latency. You can read labels, see depth, and trust edges. That means you can safely annotate a machine or place a 3D model on a real table and not feel like you’re guessing. Optical see‑through glasses still exist, but they struggle with brightness and occlusion outdoors. For indoor work and training, pass‑through AR is the safer bet.

Modern Spatial Input

Hand tracking and refined controllers let you grab, pinch, and press virtual elements with confidence. Gaze and pinch can replace a mouse when your hands are full. Voice works for commands and dictation, even in noisy settings, if you add push‑to‑talk or a wake word with clear feedback. The rule: pick one primary input and keep gestures simple.

Better On‑Device Compute

Dedicated neural and vision processors in headsets and phones accelerate SLAM (simultaneous localization and mapping), scene meshing, occlusion, and hand tracking. You get steadier anchors and fewer drift issues. This reliability is crucial for work instructions, measurements, and design review.

3D Content Pipelines That Don’t Fight You

Projects used to drown in format hell. Now glTF and USD have matured. Tools export reliably. Compression shrinks assets without trashing quality. Texture formats preserve crisp text on labels. You can publish content to the web and avoid app store delays.

Reality Capture in Minutes, Not Days

Recent photogrammetry tools, phone LiDAR, and neural methods (like NeRFs and Gaussian splatting) make it possible to capture rooms, machines, and props quickly and accurately. Even if you end up with a simplified mesh for performance, the speed of capture accelerates iteration and reduces site visits.

Reality Capture: Turning Spaces Into Assets

Spatial apps work best when the digital and physical align. That starts with capturing the real world properly.

Capture Methods You Can Use Today

- Phone LiDAR scans: Newer iPhones and iPads build coarse meshes quickly. Great for layout, furniture, and basic occlusion. You’ll get good scale, decent alignment, and files small enough for the web.

- Photogrammetry (standard cameras): Walk around, shoot overlapping photos, process into a mesh. Higher detail than phone LiDAR, but watch out for glossy or transparent surfaces. Works well for objects and small rooms.

- NeRFs and Gaussian splats: AI-based scene representations that look strikingly real with fewer photos. They’re getting interactive, but still require careful conversion for mainstream runtimes. Use them for references, context, or marketing‑quality walkthroughs, and export simplified meshes for real‑time overlays.

Best Practices for Accurate Capture

- Control scale: Place a known‑size marker (e.g., a meter stick) in frame and enforce that measurement during processing.

- Lock lighting: Keep lighting consistent. Avoid strong reflections; they create false features and hurt tracking.

- Reference points: Include 3–5 distinctive, nonreflective features in the scene. They help both reconstruction and later alignment.

- Keep files lean: Target under 300k triangles for mobile and under 1M for powerful headsets. Use LODs for larger spaces.
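
The "control scale" practice above comes down to one ratio. Here is a minimal sketch, assuming the mesh is available as a plain list of (x, y, z) vertices and that you have measured the marker inside the reconstructed model. The function names are illustrative and do not come from any capture tool.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def scale_factor(known_length_m: float, measured_length: float) -> float:
    """Ratio that maps reconstructed units onto real-world meters."""
    if known_length_m <= 0 or measured_length <= 0:
        raise ValueError("lengths must be positive")
    return known_length_m / measured_length


def rescale(vertices: List[Vertex], factor: float) -> List[Vertex]:
    """Uniformly scale every vertex about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


# Example: a 1 m stick that measures 0.8 units in the reconstruction.
factor = scale_factor(1.0, 0.8)              # about 1.25
scaled = rescale([(0.8, 0.0, 0.0)], factor)  # stick tip lands near x = 1.0
```

Most processing tools can enforce the measurement for you; a check like this is mainly useful for validating the exported file afterwards.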

Formats That Play Well With Headsets

- glTF 2.0: The “JPEG of 3D.” Great runtime format with materials, animations, and compression. Ideal for web and cross‑platform native apps.

- USD/USDZ: Powerful for scenes, variants, and pipelines. Use it when you wrangle many assets and need non‑destructive edits.

- Point clouds (E57, PLY): Good for surveys and ground truth. Convert to meshes or splats for real‑time use. Avoid shipping raw heavy point clouds to headsets unless you stream and cull aggressively.

For textures, BasisU or ASTC give compact size and fast loading. Use texture atlases to cut draw calls. Keep text at least 20–22 px at typical viewing distance to stay readable.

Anchors and Alignment: Where Digital Meets Physical

Anchors tie content to the world. Do not skip this layer.

- Local world anchors: Created on device. Fast and private. Good for temporary sessions with fixed reference objects.

- Cloud anchors / geospatial anchors: Persist across sessions and devices. Useful for multi‑user collaboration and repeat visits. Audit privacy policies and retention.

- Fiducials (AprilTags, QR): Reliable, low‑tech anchors. Place discreet markers where absolute alignment matters (machine panels, entry points). They also help re‑lock drift during long sessions.

Use at least two independent alignment sources for critical overlays. If one drifts, the other catches it.
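
One way to act on the two-source rule is to compare where each source says the same reference point is and react when they disagree. The sketch below assumes both positions are already expressed in the same world frame, in meters. The 2 cm and 5 cm thresholds are placeholder assumptions to tune per task, not recommended values.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def alignment_gap_m(a: Vec3, b: Vec3) -> float:
    """Euclidean distance between two estimates of the same point."""
    return math.dist(a, b)


def alignment_status(anchor_pos: Vec3, fiducial_pos: Vec3,
                     warn_m: float = 0.02, fail_m: float = 0.05) -> str:
    """Classify agreement between an anchor and a fiducial estimate."""
    gap = alignment_gap_m(anchor_pos, fiducial_pos)
    if gap <= warn_m:
        return "ok"
    if gap <= fail_m:
        return "warn"        # show a reduced-quality indicator to the user
    return "relocalize"      # ask the user to re-scan the fiducial


status = alignment_status((1.0, 0.0, 2.0), (1.03, 0.0, 2.0))  # "warn"
```

The returned status can drive a small alignment-quality indicator in the UI.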

Designing Interfaces That Don’t Fight Humans

Flat screens trained us to cram information everywhere. Spatial UIs reward restraint. Aim for clarity, not spectacle.

Choose World‑Fixed, Not Head‑Fixed, For Work

Floating HUDs are tempting. But world‑anchored panels near the task reduce motion sickness and eye strain. Keep panels at arm’s length. Avoid pinning panels to the face unless they’re minimal status badges.

Make Text and Labels Easy to Read

- High contrast, no fine serifs. Sans‑serif fonts render cleaner in headsets.

- Minimum sizes: 20 px for short labels, 28 px for instructions. Test while moving.

- Depth cues: Add subtle drop shadows and soft occlusion to help text “sit” in space.

Simple, Predictable Gestures

- Primary: Pinch to select, pinch‑and‑hold to drag, two‑hand stretch to scale.

- Fallback: A single controller with a trigger beats fancy hand postures for long sessions.

- Feedback: Always show a visual highlight and a tiny click sound or haptic for selection.

Safety and Comfort First

- Session length: 20–30 minutes per block for new users. Include planned breaks.

- Movement: Prefer “teleport” or short fades to reduce vection. In pass‑through AR, keep the frame rate high.

- Boundaries: Show a guardian grid at the edge of safe space. Never place UI just beyond a physical step or hazard.

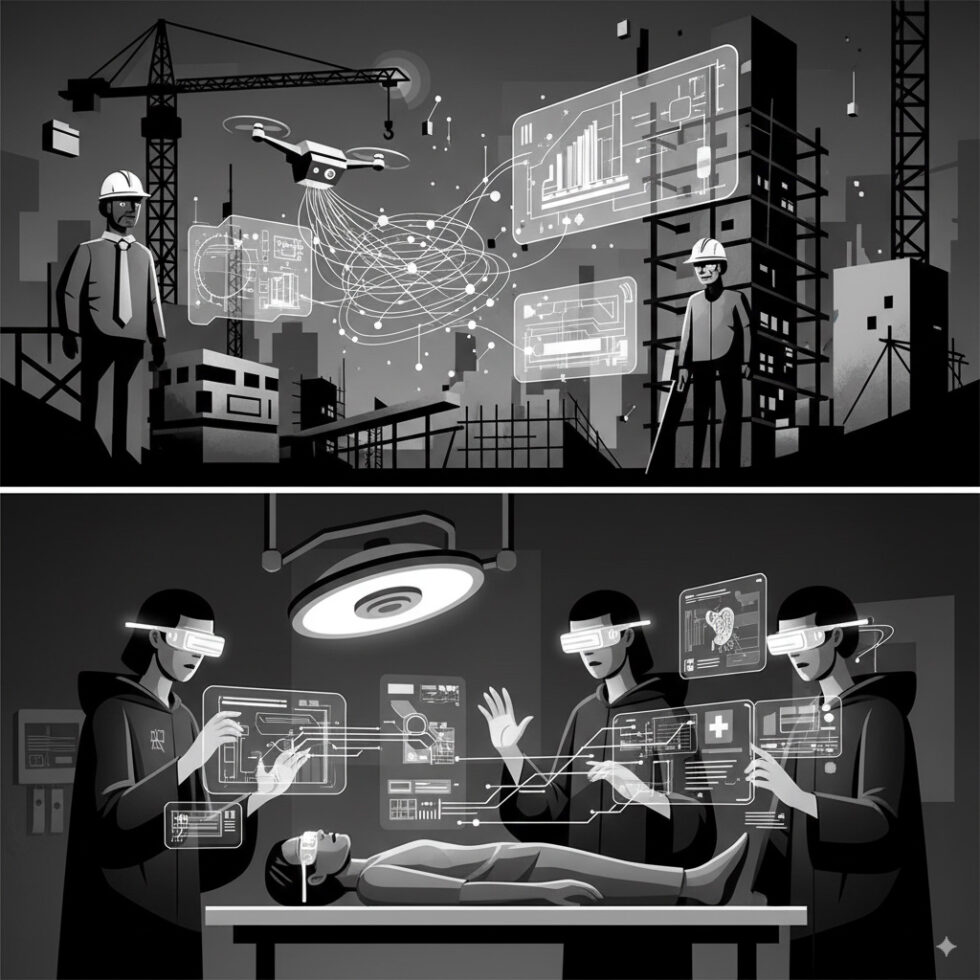

Where Spatial Computing Delivers ROI Today

Focus on repeatable workflows where a little 3D and context awareness saves minutes across many people. These are proven to stick:

Remote Assist With Shared Anchors

A technician on site sees the machine through the headset. An expert connects from a laptop, draws on the video feed, and drops anchored arrows that “stick” to parts. This cuts travel and reduces time to resolution. You will need a simple way to save snapshots and your annotations for records.

Guided Training and Work Instructions

Step‑by‑step overlays with photos, arrows, and short video loops reduce errors. Anchors keep instructions near the part. Add a “quick check” at each step with a photo confirmation and a checklist to build confidence and traceability.

Design Review in Context

Load the latest CAD model as a glTF, place it in the real environment, and walk around it. You catch clearance issues in minutes. Use LODs to keep meshes light, simplify materials, and focus on dimensions and interactions over realism.

Warehouse Picking and Slotting

Use pass‑through AR to show pick bins, highlight the next item, and show the shortest route. The value is in reduced mispicks and less travel distance. Pair with handheld scanners or simple controller input for confirmation. Stabilize anchors with fiducials on aisles to avoid drift over long distances.

Touring and Visitor Guidance

Museums, campuses, and venues can use geospatial anchors and sign‑posted fiducials to place helpful overlays. Keep it optional and light: context cards, subtle arrows, and a “tell me more” gesture.

Field Inspection and Documentation

Annotate defects in place, capture photos aligned to tagged coordinates, and export a report automatically. The key win is less transcription and fewer missed items. Use voice dictation for notes when hands are busy.

Metrics That Matter

Pick measurable outcomes before you build. A few examples:

- Remote assist: First‑time fix rate, mean time to resolution, expert travel avoided.

- Training: Error rate per step, completion time, rework rate after training.

- Design review: Issues found per hour, change requests avoided before fabrication.

- Warehouse: Picks per hour, mispicks per 1,000 lines, average route distance.

- Inspection: Findings per hour, missed defects in audit, time from capture to report.

Set a baseline, run a 2–4 week pilot, and compare. If your metrics don’t move, simplify the UI or choose a different task.
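
The baseline-versus-pilot comparison is simple arithmetic, sketched below for the warehouse metric. The counts are made-up illustration values, not results from any real pilot, and the function names are hypothetical.

```python
def per_thousand(events: int, total: int) -> float:
    """Normalize an event count to a rate per 1,000 units."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 1000.0 * events / total


def percent_change(baseline: float, pilot: float) -> float:
    """Relative change from baseline; negative means the value went down."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (pilot - baseline) / baseline


# Illustrative numbers only: mispicks out of order lines picked.
baseline_rate = per_thousand(12, 4000)   # 3.0 mispicks per 1,000 lines
pilot_rate = per_thousand(6, 4000)       # 1.5 mispicks per 1,000 lines
change = percent_change(baseline_rate, pilot_rate)  # -50.0 percent
```

Normalizing to a rate before comparing keeps the result honest when the baseline and pilot periods cover different volumes.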

Build a Pilot in Weeks, Not Months

Start small and iterate. Here’s a sequence that works:

Week 1: Scope and Capture

- Pick one workflow with a motivated team and a clear metric.

- Capture the space with a phone LiDAR scan or photogrammetry. Measure one known distance for scale validation.

- Place fiducials where you need precise alignment. Map their coordinates in a simple spreadsheet.
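
One possible use for that fiducial spreadsheet is fitting the scan to the site. The sketch below fits a 2D rigid transform (rotation about the vertical axis plus a shift on the floor plane) that maps scan coordinates onto measured site coordinates. It assumes scale is already correct, that a floor-plan fit is enough, and that the spreadsheet exports a CSV with the hypothetical columns shown.

```python
import csv
import io
import math
from typing import List, Tuple

Point = Tuple[float, float]

# Hypothetical spreadsheet export: each tag's position in the scan and on site.
FIDUCIALS_CSV = """id,scan_x,scan_y,site_x,site_y
tag_a,0,0,2,1
tag_b,1,0,2,2
tag_c,0,1,1,1
"""


def load_pairs(text: str) -> Tuple[List[Point], List[Point]]:
    """Read matched scan/site points from CSV text."""
    scan, site = [], []
    for row in csv.DictReader(io.StringIO(text)):
        scan.append((float(row["scan_x"]), float(row["scan_y"])))
        site.append((float(row["site_x"]), float(row["site_y"])))
    return scan, site


def fit_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation (radians) and translation mapping src onto dst."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched points")
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centered source point
        bx, by = qx - dx, qy - dy          # centered target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply_rigid_2d(p: Point, theta: float, tx: float, ty: float) -> Point:
    """Rotate by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


scan_pts, site_pts = load_pairs(FIDUCIALS_CSV)
theta, tx, ty = fit_rigid_2d(scan_pts, site_pts)  # sample data is a 90 degree turn
```

With three or more tags, the leftover distance between each transformed scan point and its site point gives a quick estimate of alignment error.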

Week 2: First Interactive Prototype

- Import the scan into a basic scene. Add a world‑anchored panel with the first three steps of your workflow.

- Implement selection via pinch and highlight. Add an obvious “next” button in reach.

- Test anchoring with a second person. Walk the space and see if panels drift.

Weeks 3–4: Iterate and Measure

- Refine anchor strategy: Combine cloud anchors and fiducials for stability.

- Trim content: Compress textures, reduce triangles, add LODs. Aim for 60–90 FPS.

- Run a small pilot: 5–10 users, 20–30 minutes each. Collect timing, errors, and feedback.

Security and IT: Don’t Get Blocked Later

Spatial apps touch cameras, microphones, and local maps. That makes IT cautious. Prepare answers early.

- Device management: Enroll headsets and phones with your MDM. Define camera permissions per app.

- Data boundaries: Separate PII and media. Keep raw video local when possible. Send only annotations and metrics to the cloud.

- Offline mode: Support spotty connectivity. Cache assets and fail gracefully when anchors can’t resolve.

- Audit logs: Log anchor creation, annotations, and media exports for traceability.
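
The audit-log item can start as one JSON line per event. This is a minimal sketch: the event names, field names, and the "tech-17" user ID are assumptions chosen for illustration, and a real deployment would follow whatever schema your IT team already uses.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict

# Assumed event vocabulary, mirroring the three actions listed above.
ALLOWED_EVENTS = {"anchor_created", "annotation_added", "media_exported"}


def audit_record(event: str, user_id: str, details: Dict[str, Any]) -> str:
    """Return one JSON line describing an auditable action."""
    if event not in ALLOWED_EVENTS:
        raise ValueError(f"unknown audit event: {event}")
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        "event": event,
        "user": user_id,
        "details": details,
    }
    return json.dumps(record, sort_keys=True)


line = audit_record("anchor_created", "tech-17", {"anchor_id": "a1"})
```

Appending these lines to a local file and uploading them when connectivity returns also fits the offline-mode requirement.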

Content Pipeline That Scales

Think of 3D content like a miniature web stack.

Authoring and Conversion

- Author in USD or native DCC (Blender, Maya, 3ds Max), then export to glTF for runtime.

- Compress geometry with Draco, textures with BasisU/ASTC. Use texture atlases to cut materials.

- Automate checks: Max poly count, naming conventions, unit scale, PBR material sanity.
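
The automated checks can begin as a small script run before publishing. The sketch below assumes a hypothetical per-asset summary dictionary that your export step produces; it is not a glTF parser. The snake_case naming rule is an assumed convention. The 300k triangle cap comes from the budgets in this guide, and the meter units and 0 to 1 metallic/roughness range follow glTF 2.0.

```python
import re
from typing import Any, Dict, List

NAME_PATTERN = re.compile(r"^[a-z0-9]+(_[a-z0-9]+)*$")  # assumed: lower_snake_case
MAX_TRIANGLES = 300_000                                  # mobile-class budget


def check_asset(asset: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems; empty means the asset passes."""
    problems = []
    if not NAME_PATTERN.match(asset["name"]):
        problems.append(f"name '{asset['name']}' breaks naming convention")
    if asset["triangles"] > MAX_TRIANGLES:
        problems.append(f"{asset['triangles']} triangles exceeds {MAX_TRIANGLES}")
    if abs(asset["meters_per_unit"] - 1.0) > 1e-6:
        problems.append("unit scale is not 1 unit = 1 meter")
    for material in asset["materials"]:
        for key in ("metallic", "roughness"):
            if not 0.0 <= material[key] <= 1.0:
                problems.append(f"material '{material['name']}' has {key} outside 0..1")
    return problems


good = {"name": "pump_housing", "triangles": 120_000, "meters_per_unit": 1.0,
        "materials": [{"name": "steel", "metallic": 1.0, "roughness": 0.4}]}
bad = {"name": "Pump Housing FINAL", "triangles": 900_000, "meters_per_unit": 0.01,
       "materials": [{"name": "steel", "metallic": 1.0, "roughness": 1.7}]}

good_report = check_asset(good)   # no problems
bad_report = check_asset(bad)     # one problem per failed rule
```

Running this in CI and failing the build on a non-empty report keeps oversized or mis-scaled assets from reaching headsets.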

Delivery and Versioning

- CDN delivery: Host assets behind a CDN. Version file names to bust caches predictably.

- Feature flags: Toggle new UI or content per team while piloting. Roll back quickly.

- Metrics hook: Track asset load time, anchor resolution time, frame rate.
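
A common way to version file names is to embed a short content hash, so the name changes exactly when the bytes change. Here is a minimal sketch; the 8-character digest length and the name.hash.ext layout are assumptions, not a required convention.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(filename: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short SHA-256 prefix before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    path = PurePosixPath(filename)
    return f"{path.stem}.{digest}{path.suffix}"


name_v1 = versioned_name("pump.glb", b"first export")
name_v2 = versioned_name("pump.glb", b"second export")  # different bytes, different name
```

Because a hashed name never points at different bytes, the CDN can cache each file for a long time while the manifest that lists current names stays short-lived.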

Performance Budgets

- Triangles: Under 300k per view for mobile‑class devices. Use LODs for big scenes.

- Materials: Keep it under 20 per scene. Merge where possible.

- Draw calls: Batch aggressively. Avoid animated shaders unless necessary.

- Foveation: Use foveated rendering if available. It’s free FPS if tuned well.
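
To make the triangle budget concrete, here is a sketch of one simple LOD policy: start every asset at full detail, then step the farthest assets down until the view fits the budget. The asset records, their triangle counts, and the farthest-first rule are illustrative assumptions; engines usually ship their own LOD selection.

```python
from typing import Any, Dict, List, Tuple


def pick_lods(assets: List[Dict[str, Any]],
              budget: int = 300_000) -> Tuple[Dict[str, int], int]:
    """Choose an LOD index per asset so total triangles fit the budget if possible."""
    levels = {a["name"]: 0 for a in assets}  # 0 = most detailed

    def total() -> int:
        return sum(a["lod_triangles"][levels[a["name"]]] for a in assets)

    farthest_first = sorted(assets, key=lambda a: a["distance_m"], reverse=True)
    while total() > budget:
        for asset in farthest_first:
            # Step down the farthest asset that still has a coarser level.
            if levels[asset["name"]] < len(asset["lod_triangles"]) - 1:
                levels[asset["name"]] += 1
                break
        else:
            break  # everything is already at its coarsest level
    return levels, total()


scene = [
    {"name": "machine", "distance_m": 1.5, "lod_triangles": [200_000, 80_000, 20_000]},
    {"name": "shelf", "distance_m": 6.0, "lod_triangles": [150_000, 50_000, 10_000]},
]
levels, triangles = pick_lods(scene)  # shelf drops one level; machine stays sharp
```

If the function returns a total that is still over budget, the scene needs coarser LODs or fewer assets in view.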

Web or Native?

You don’t have to pick one forever. Start where your constraints suggest.

- WebXR: Lower friction. No app store, easy updates, great for pilots and content viewing. Limited access to some sensors and OS‑level features.

- Native XR: Deeper integration, better performance, and access to advanced anchors, hand tracking, and system UI. More overhead to deploy and update.

Many teams prototype with WebXR and graduate to native for production features. Keep assets in the same formats so you can switch without a rewrite.

Team Roles You Actually Need

You can do a lot with a small team if you pick clear roles:

- XR developer: Builds interactions and anchoring. Knows either a native SDK or WebXR well.

- 3D artist/technical artist: Cleans meshes, bakes textures, enforces budgets.

- Spatial UX designer: Prototypes layouts in space, defines gestures, tests readability.

- Workflow owner: The person whose job you’re improving. They validate steps and success criteria.

Common Pitfalls (And How to Avoid Them)

- Over‑precision overlays: Sub‑millimeter alignment is unrealistic in dynamic settings. Use arrows and highlights, not pixel‑perfect masks.

- Lighting mismatch: Unlit, flat UI looks fake against real shadows. Add subtle ambient occlusion and match color temperature to the scene.

- Too much text: People won’t read paragraphs in a headset. Use bullets, icons, and short clips.

- Battery and heat: Plan 20–30 minute sessions and swap batteries or charge between crews.

- Ignored drift: Anchors will drift over time. Use fiducials or re‑localize regularly. Indicate alignment quality to the user.

Near‑Term Upgrades to Watch

These improvements are landing fast and will make pilots even easier:

- Better color pass‑through and depth sensors for cleaner occlusion and stable anchors.

- On‑device scene understanding: Real‑time plane detection, object recognition, and semantics to auto‑place UI.

- Shared spaces: Multi‑user sessions with consistent anchors and permissions across headsets and devices.

- Smarter reconstruction: Neural captures that export directly to runtime‑friendly meshes with PBR materials.

One‑Day Checklist: Your First Spatial Flow

- Pick one workspace and a single, measurable task (e.g., “replace filter,” “find bin A3”).

- Scan the space with a phone, place three fiducials where precision matters.

- Import scan, place a world‑anchored panel with three steps. Add pinch to advance.

- Test with two users, time it, note pain points. Fix the top two issues. Repeat.

Why Now Is Different

The core value of spatial computing is simple: context. Instead of asking a worker to imagine step three from a PDF, you put the step at the exact spot where it happens. Instead of discussing a design on a flat screen, you place it in the real room. You save minutes and prevent errors by removing the mental translation step.

We now have the ingredients to make that value accessible without years of R&D: pass‑through AR that is clear and low‑latency, fast capture, robust anchoring, and standard formats. With a small team and a focused scope, you can go from “cool demo” to “we use this every day” in a month.

Summary:

- Pass‑through AR, better sensors, and mature 3D formats make spatial computing viable for work today.

- Use quick reality capture (phone LiDAR, photogrammetry, or neural methods) and keep assets lean with glTF/USD and compression.

- Anchor content with a mix of local anchors, cloud/geospatial anchors, and fiducials for stability and repeatability.

- Design world‑fixed panels, readable text, simple gestures, and build in safety and comfort from the start.

- Target proven use cases: remote assist, guided training, design review, warehouse flows, tours, and inspections.

- Measure outcomes like error rates, time to resolution, and pick rates to prove ROI.

- Start with a 3–4 week pilot, iterate quickly, and plan for IT needs: device management, data boundaries, offline mode, and audit logs.

- Structure your content pipeline with budgets, compression, and CDN delivery, and choose WebXR or native based on constraints.